Apache JMeter is probably the most popular and best-known load testing tool out there, and for good reasons: it is Open Source, it supports almost any protocol you can imagine, it has more than 20 years of history, and it works on all platforms.

Unfortunately, it also has its downsides. In my opinion, learning JMeter takes a lot of time because there are so many options, the UI is not especially friendly, and there is a «language» you have to know to do more «advanced» things, like generating random dates or numbers, for example:

${__Random(0,10)}

But learning to use a tool takes time; that’s not new. Why would JMeter be any different? Even if there is an alternative, learning to use it will also take time, right? Well, maybe not. Maybe you already know how to use that alternative!
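For comparison, this is the same kind of randomness in plain Python, using nothing but the standard library and no special syntax. It is a minimal sketch; random_number and random_date are illustrative helper names, not part of JMeter or Locust:

```python
import random
from datetime import date, timedelta

def random_number(low: int, high: int) -> int:
    # random.randint includes both low and high.
    return random.randint(low, high)

def random_date(start: date, end: date) -> date:
    # Pick a random day between start and end, both included.
    span_days = (end - start).days
    return start + timedelta(days=random.randint(0, span_days))

print(random_number(0, 10))
print(random_date(date(2021, 1, 1), date(2021, 12, 31)))
```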

Load Testing

https://estructurando.net/2019/03/25/prueba_de_carga_viaducto_del_tajo/

When we talk about software, load testing usually consists of simulating real traffic on a service to see how it behaves. The goal is to discover potential bugs and weaknesses that only appear when multiple users interact with the service concurrently, but it can also be useful as a way to measure performance in a more realistic scenario before going into production.
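To make the idea concrete, here is a toy simulation in plain Python (no Locust, no network): a hypothetical service that can only serve two requests at a time, hit first by one user and then by ten users at once. The capacity of 2 and the 50 ms service time are arbitrary assumptions chosen for illustration:

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import List

# Hypothetical service: at most 2 requests are processed at once
# (think of a small worker or connection pool), ~50 ms each.
capacity = threading.Semaphore(2)

def handle_request() -> None:
    with capacity:
        time.sleep(0.05)

def timed_request() -> float:
    # Latency seen by one simulated user, including time spent queuing.
    start = time.perf_counter()
    handle_request()
    return time.perf_counter() - start

def run_load(users: int) -> List[float]:
    # Every simulated user fires one request at roughly the same moment.
    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(timed_request) for _ in range(users)]
        return [f.result() for f in futures]

single = run_load(1)
crowd = run_load(10)
print(f"1 user:   worst latency {max(single):.3f}s")
print(f"10 users: worst latency {max(crowd):.3f}s")
```

With one user, the latency is roughly the 50 ms service time; with ten users, the last requests have to wait for the others and take roughly five times as long. The service code did not change, only the number of concurrent users, which is exactly the kind of weakness load testing is meant to surface.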

Today we will focus on HTTP services, so we will be sending requests in parallel. But load testing looks different depending on the service you are working with: it could mean processing a very big file or getting bombarded with thousands of Kafka events, for example.

A JMeter alternative: Locust

Locust is very different from JMeter. They both do load testing, but that is pretty much all they have in common. Instead of a UI full of options, Locust has code. Python code, to be precise. Yes, there is a UI (a beautiful one, in fact), but it is for visualization, not for test configuration.

Remember when I said that maybe you already know how to use this JMeter alternative? Well, if you know Python you already know 90% of Locust.

Wait! Don’t panic, even if you don’t know Python. This is what a test looks like in Locust:

from locust import HttpUser, task

class ExampleTest(HttpUser):
    @task
    def home(self):
        self.client.request(method="GET", url="/home")

That is a very simple but real example of a test you can run with Locust. Let me save it as example-test.py and run it for you, so we can see the UI.

Oh by the way, you will need to install Locust first of course. It is pretty easy. Once you have it you can run the test with:

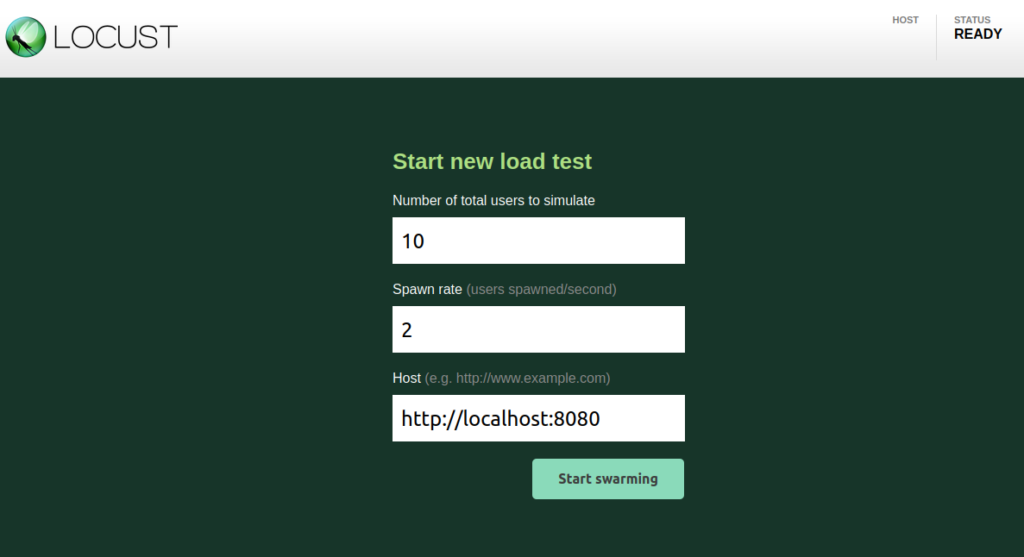

locust -f example-test.pyThat will start Locus so we can now open http://localhost:8089 in the browser and enter a few parameters to launch the test:

We are going to simulate 10 users, which means up to 10 concurrent requests at any time against a local server on port 8080. Our «users» will be hitting the /home endpoint non-stop, as defined in our @task before:

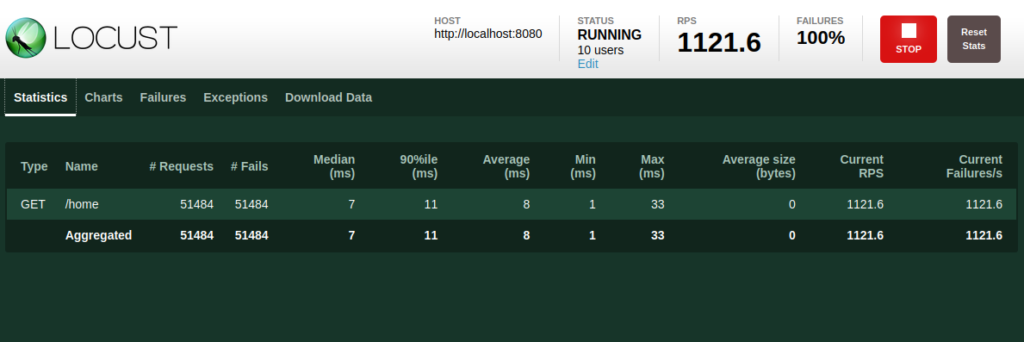

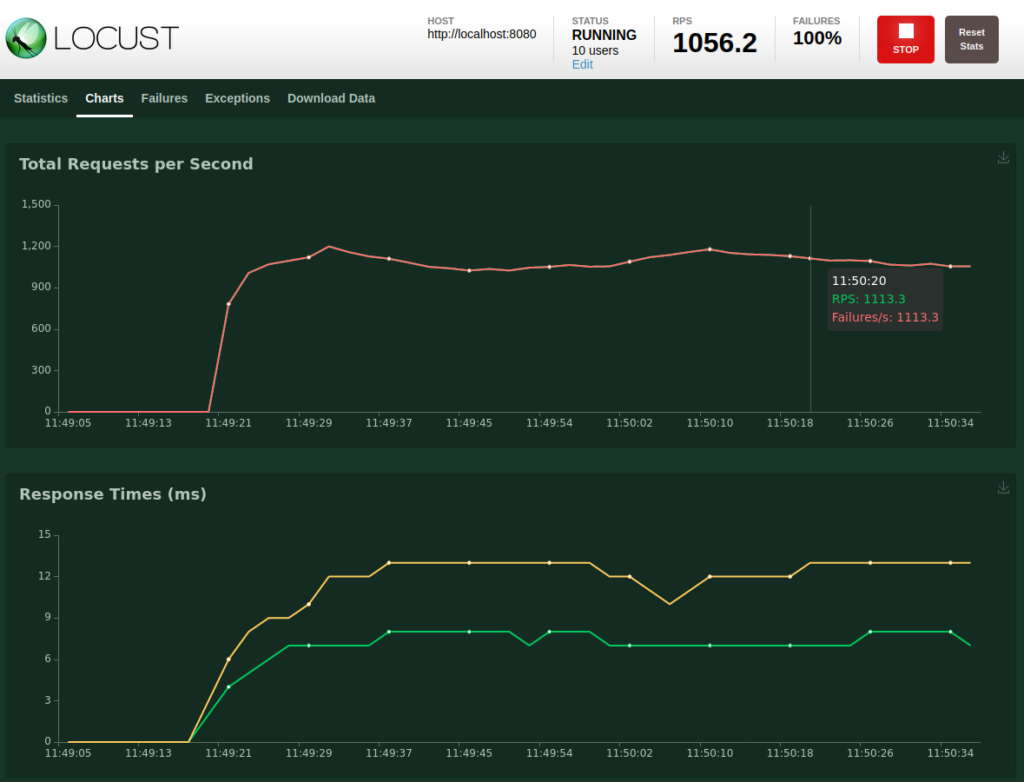

As we can see, all requests are failing. Well, that’s because I have absolutely nothing running on port 8080, but this was just an example. Let’s stop the test and do something actually useful.

Creating a Locust Test

Imagine that we had a Twitter-like website with two main endpoints:

- /timeline to see all the messages sorted by publication date

- /shoutout to post a new message everyone can see

And we want to test how it behaves when many users are refreshing their timelines and posting new messages.
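If you want to follow along without a real Twitter-like site, a tiny stand-in server is enough to point Locust at. This is a hypothetical sketch using only the standard library: the in-memory list, the JSON response shape and the 201 status code are my assumptions, not part of any real API:

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.parse import parse_qs

# In-memory storage for the hypothetical Twitter-like site.
messages = []
messages_lock = threading.Lock()

class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path != "/timeline":
            self.send_error(404)
            return
        with messages_lock:
            # Newest first: reverse of publication order.
            body = json.dumps(list(reversed(messages))).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_POST(self):
        if self.path != "/shoutout":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        # A dict posted as form data arrives form-encoded in the body.
        form = parse_qs(self.rfile.read(length).decode())
        entry = {"username": form.get("username", [""])[0],
                 "message": form.get("message", [""])[0]}
        with messages_lock:
            messages.append(entry)
        self.send_response(201)
        self.send_header("Content-Length", "0")
        self.end_headers()

    def log_message(self, format, *args):
        pass  # keep the console quiet under load

def start_server(port: int = 8080) -> ThreadingHTTPServer:
    # Serve in a background thread so the caller keeps control.
    server = ThreadingHTTPServer(("127.0.0.1", port), Handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

Calling start_server(8080) from a Python session, and keeping that session open, should let you use http://localhost:8080 as the host in the Locust UI.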

Our Locust test could look like this:

from locust import HttpUser, task

class WebsiteUser(HttpUser):
    @task
    def timeline(self):
        self.client.get("/timeline")

    @task
    def shoutout(self):
        self.client.post("/shoutout", {"username": "testuser", "message": "hello!"})

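A Locust user can have multiple @task methods. Every time a simulated user is ready for its next task, it picks one of them at random, and it keeps doing that for the duration of the test. So, if we run the test with 10 users, each user will mix both tasks, and on average half of the tasks will load the timeline while the other half post new messages.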
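To see why every user mixes both tasks, instead of half the users sticking to one of them, here is a rough pure-Python model: one independent random pick before every task, with equal weights. It is a simplified model, not Locust’s scheduler code; simulate and TASKS are hypothetical names:

```python
import random
from collections import Counter
from typing import Dict

TASKS = ["timeline", "shoutout"]

def simulate(users: int, iterations: int, seed: int = 42) -> Dict[int, Counter]:
    rng = random.Random(seed)  # seeded so runs are repeatable
    per_user = {}
    for user in range(users):
        # Each user makes an independent random pick before every task.
        per_user[user] = Counter(rng.choice(TASKS) for _ in range(iterations))
    return per_user

per_user = simulate(users=10, iterations=1000)
total = sum(per_user.values(), Counter())
share = total["timeline"] / sum(total.values())
print(f"timeline share: {share:.3f}")  # roughly 0.5
print(all(c["timeline"] and c["shoutout"] for c in per_user.values()))  # True
```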

Let’s change that: we expect our users to load the timeline much more often than they post messages. We can easily change the probability of a task with:

@task(10)
def timeline(self):
    self.client.get("/timeline")

Nice, the timeline task is now 10 times more likely to happen than the shoutout one.
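Weights are relative, so over many picks a task’s share is its weight divided by the sum of all weights. A small sketch of that arithmetic; task_shares is a hypothetical helper, not a Locust function:

```python
from fractions import Fraction
from typing import Dict

def task_shares(weights: Dict[str, int]) -> Dict[str, Fraction]:
    # Each weight is relative: share = weight / total weight.
    total = sum(weights.values())
    return {name: Fraction(w, total) for name, w in weights.items()}

shares = task_shares({"timeline": 10, "shoutout": 1})
print(shares["timeline"])  # 10/11
```

In other words, with weights 10 and 1, roughly 91% of the tasks will load the timeline and roughly 9% will post a message.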

Let’s also add a delay between tasks, so our users behave more like humans. Something between 1 and 3 seconds, for example (between is imported from locust, just like HttpUser and task):

wait_time = between(1, 3)

Looking good. How about giving our users random names, and making all messages random too, instead of just «hello!»?
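Before getting to the names, it may help to picture what a random wait between 1 and 3 seconds means. This is a hypothetical stand-in written for illustration, not Locust’s source: between_sketch returns a function that draws a fresh pause every time it is called:

```python
import random
from typing import Callable

def between_sketch(min_wait: float, max_wait: float) -> Callable[[], float]:
    # Returns a function yielding a random pause, in seconds,
    # anywhere in [min_wait, max_wait]; a new value on every call.
    def wait_time() -> float:
        return random.uniform(min_wait, max_wait)
    return wait_time

wait_time = between_sketch(1, 3)
pauses = [wait_time() for _ in range(5)]
print(pauses)
```

The average pause here is 2 seconds, so 10 users can run at most about 10 / 2 = 5 tasks per second in total, and somewhat fewer once response times are added on top.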

I am a lazy programmer, so I will just grab a couple of libraries to help me do this. Yes, a Locust test is just Python code, so you can use third-party libraries:

- to generate random names: https://pypi.org/project/names/

- to generate random messages: https://pypi.org/project/lorem-text/
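If you would rather not add dependencies, the standard library can produce rough test data too. This is a swapped-in alternative, not what names or lorem-text do internally; random_full_name, random_message and the word lists are hypothetical:

```python
import random

# Hypothetical word lists; swap in anything you like.
FIRST_NAMES = ["Ada", "Alan", "Grace", "Linus", "Margaret", "Dennis"]
LAST_NAMES = ["Lovelace", "Turing", "Hopper", "Torvalds", "Hamilton", "Ritchie"]
WORDS = ["lorem", "ipsum", "dolor", "sit", "amet", "consectetur",
         "adipiscing", "elit", "sed", "do", "eiusmod", "tempor"]

def random_full_name() -> str:
    return f"{random.choice(FIRST_NAMES)} {random.choice(LAST_NAMES)}"

def random_message(word_count: int = 10) -> str:
    # Words may repeat, which is fine for test data.
    return " ".join(random.choice(WORDS) for _ in range(word_count))

print(random_full_name())
print(random_message())
```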

Perfect, this is what the test looks like with all the changes:

from locust import HttpUser, task, between
from lorem_text import lorem
import names

class WebsiteUser(HttpUser):
    username = None
    wait_time = between(1, 3)

    @task(10)
    def timeline(self):
        self.client.get("/timeline")

    @task
    def shoutout(self):
        message = lorem.words(10)
        self.client.post("/shoutout", {"username": self.username, "message": message})

    def on_start(self):
        self.username = names.get_full_name()
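One detail worth knowing about the shoutout task: the dict is passed as the data argument of self.client.post, and HttpUser’s client (built on the requests library) form-encodes it. This standard-library sketch shows roughly what ends up in the request body; the payload values are made up:

```python
from urllib.parse import parse_qs, urlencode

# The kind of dict passed as the second argument to self.client.post(...)
payload = {"username": "Jane Doe", "message": "lorem ipsum dolor"}

# Form encoding turns it into a body like this one.
body = urlencode(payload)
print(body)  # username=Jane+Doe&message=lorem+ipsum+dolor

# A server parsing the form body gets the original values back.
decoded = {k: v[0] for k, v in parse_qs(body).items()}
assert decoded == payload
```

If your service expects JSON instead, you can pass it as self.client.post("/shoutout", json={...}).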

Twenty lines of code, not bad, huh? And pretty easy to understand, too: on_start runs once when each simulated user starts, so every user gets its own random name. Imagine doing this with JMeter. And imagine having to maintain that test if someone else had configured it. Yeah, good luck with that.

Conclusion

We have only scratched the surface with this example. Locust can validate responses, run several user classes in the same test to simulate different flows, tag tasks, persist cookies for our users, run a test from multiple machines (yeah, like a cluster!), and generate reports and CSVs…

It is quite complete. If you want to know more, I encourage you to have a look at the official Locust documentation; it is great.

Oh, and it is worth mentioning that Locust is Free Software (MIT License). You can have a look at its GitHub repository and give it a star!

If you have any questions or want to say something feel free to leave a comment below. I hope it was useful, enjoy swarming your services!